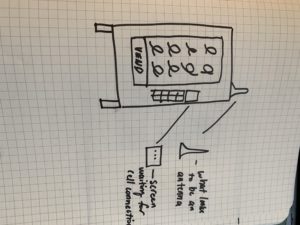

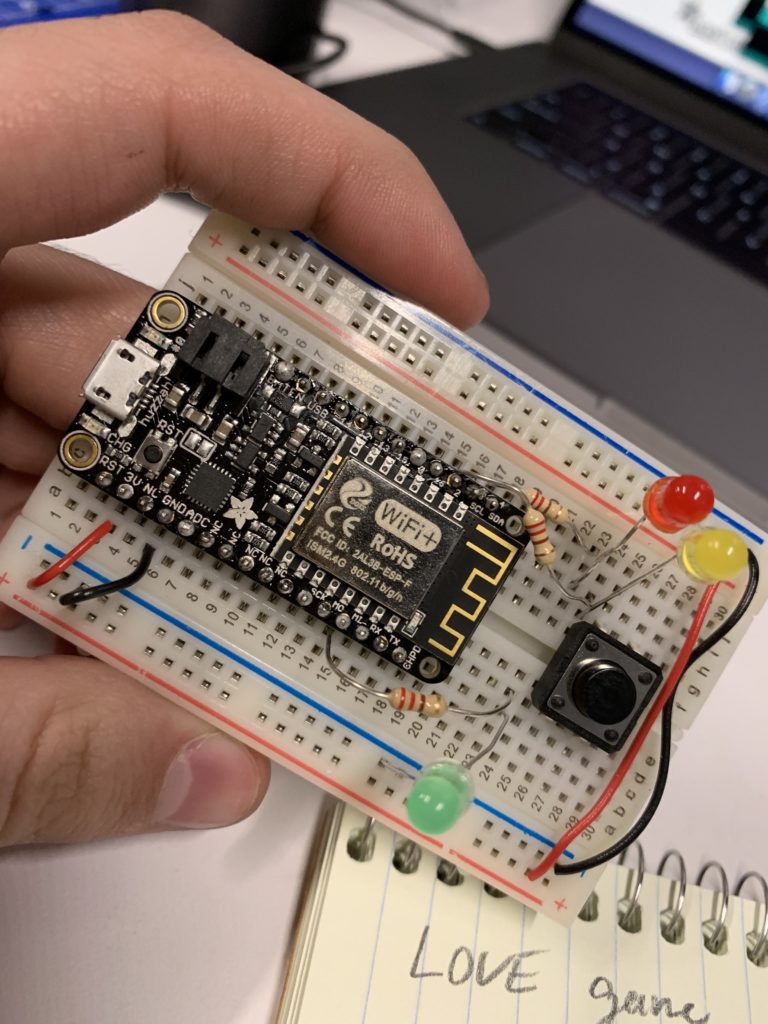

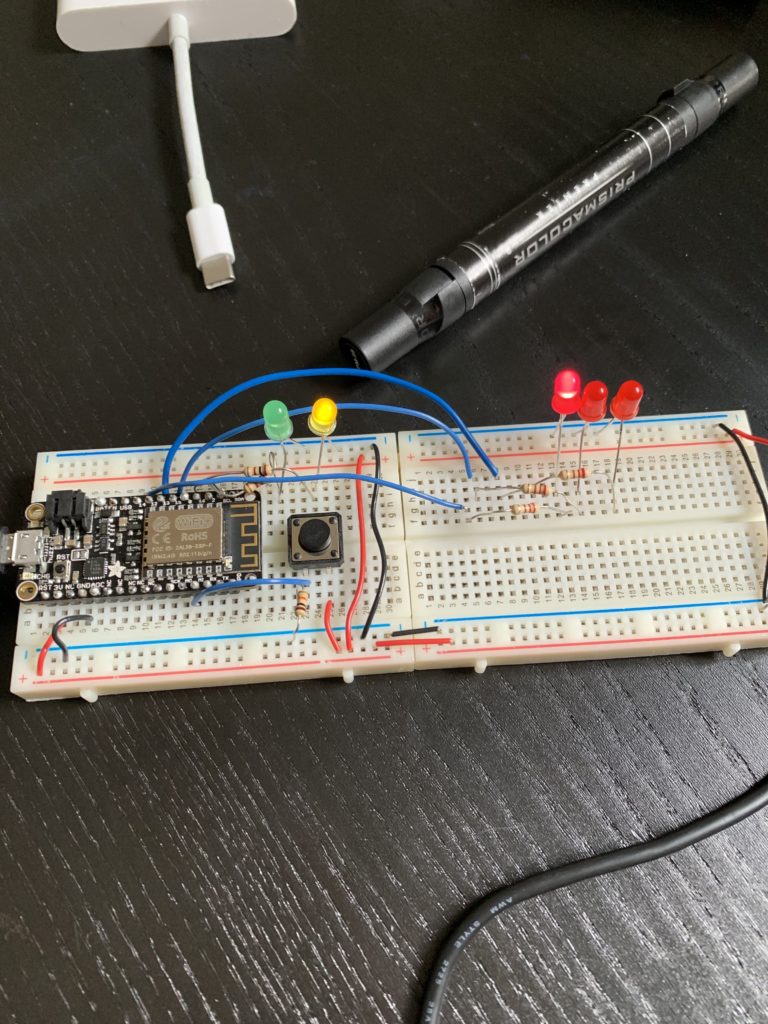

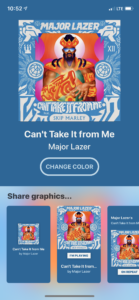

Here’s my final project. I hooked up Adafruit NeoPixel lights to my Share Music Graphics app, which is on the App Store: https://itunes.apple.com/us/app/share-music-graphics/id1446148157?mt=8

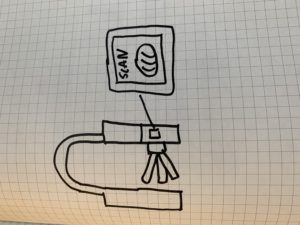

The app pings the Apple Music API for the colors it associates most with the album cover, then sets the app’s background color to match. As soon as the background color is set, the app sends a POST request to the Adafruit Data Broker service containing the background color’s RGB values as a string. The Arduino then takes that string, parses it, turns each piece into an integer, and sets the RGB values accordingly. Getting the Arduino to do this with the string was a little tricky, because strings on the Arduino board are char[] arrays. That’s why I had to add X’s into the string wherever a value was missing.
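To make the X padding concrete, here is a minimal sketch of the parsing step in plain C++. It is not the code from my gist. It assumes a fixed 9-character payload with three characters per channel, padded with X’s (so `25512X7XX` would mean R=255, G=12, B=7). That exact layout and the names `Rgb`, `parseChannel`, and `parseColor` are assumptions made up for illustration.

```cpp
#include <string.h>

// Hypothetical container for the three parsed channels.
struct Rgb {
    int r;
    int g;
    int b;
};

// Parse one 3-character field such as "255", "12X" or "7XX".
// 'X' is treated as padding and skipped. Returns -1 when the field
// contains anything else, has no digits, or is larger than 255.
int parseChannel(const char *field) {
    int value = 0;
    int digits = 0;
    for (int i = 0; i < 3; ++i) {
        char c = field[i];
        if (c == 'X') {
            continue;               // padding, carries no value
        }
        if (c < '0' || c > '9') {
            return -1;              // unexpected character (or early end of string)
        }
        value = value * 10 + (c - '0');
        ++digits;
    }
    if (digits == 0 || value > 255) {
        return -1;
    }
    return value;
}

// Parse an assumed 9-character payload laid out as RRRGGGBBB.
// Writes into *out and returns true only when all three fields are valid.
bool parseColor(const char *payload, Rgb *out) {
    if (payload == 0 || strlen(payload) != 9) {
        return false;
    }
    int r = parseChannel(payload);
    int g = parseChannel(payload + 3);
    int b = parseChannel(payload + 6);
    if (r < 0 || g < 0 || b < 0) {
        return false;
    }
    out->r = r;
    out->g = g;
    out->b = b;
    return true;
}
```

The fixed width is what makes this easy with a char[] array: every channel always starts at the same offset, so there is no need to search for separators. On the board, the parsed values could then be handed to the NeoPixel library, for example with `strip.fill(strip.Color(c.r, c.g, c.b))` followed by `strip.show()`, assuming an `Adafruit_NeoPixel` object named `strip`.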

Here’s the link to the code that I wrote: https://gist.github.com/Alexs2424/f9ac29a5af45a0bb8a99bea4a9c9179b

Here’s the Swift version: https://gist.github.com/Alexs2424/d583552f8664c0d82d6932fe1df918e0

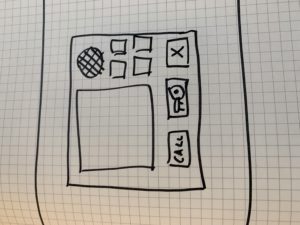

Then, if you hit the change color button in the app’s UI, whatever the background color is gets sent to the light strip. I really liked this because it was interesting to do more of an installation piece, where the Arduino does something in real time. I loved figuring it out and seeing how fast the data broker can be sometimes. Considering the multitude of services and requests the data passes through, it’s pretty mind-boggling that everything can work that fast.

It didn’t work in class because two of the wires got mixed up and switched around, which was completely my fault. The lessons: have the demo hooked up before you go on stage, and once you get it working, take a video of it.

Here’s the link to a video of everything in action, with the app and the lights changing in real time.

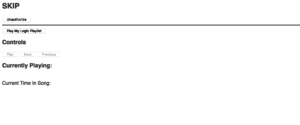

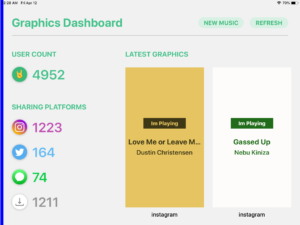

This is a screenshot of our dashboard showing some of the real-time statistics we have now, which might be incorporated in some form into the final project.

Sign up on our website at http://noisehub.co.