1.) LaGuardia Southwest Check-In

First, the LaGuardia renovation is all that you hoped for and more – no more “bodega with planes” vibe. But onto devices!

Security at airports bears the brunt of travel-tech criticism, for good reason – the TSA is horrifically inefficient and ineffective, and the term “millimeter wave scanner” alone sounds like the brainchild of some TV-Trope mad scientist trying to come up with the most physics-sounding jumble of words possible.

But let’s talk about the second-biggest source of travel ire: boarding.

First, you have the actual herding-cattle exercise that is lining up to board, which, depending on your airline, can be meh or hell. Southwest’s is the most-least-illogical I’ve seen so far, grouping you into A’s and B’s and C’s and then having you arrange yourselves according to your boarding number in neat segments of five.

Overall, the boarding process was probably the result of some multimillion-dollar consultancy with a lot of random bits of psychology thrown in there for good measure.
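For the curious, here’s a rough Python sketch of the lineup logic described above. The names (`BoardingPass`, `lineup_segment`, `boarding_order`) are made up for illustration and have nothing to do with Southwest’s actual systems; the only things taken from the real process are the A/B/C groups and the segments of five.

```python
from typing import NamedTuple


class BoardingPass(NamedTuple):
    group: str     # "A", "B", or "C"
    position: int  # boarding number within the group, starting at 1


def lineup_segment(bp: BoardingPass, segment_size: int = 5) -> tuple[int, int]:
    """Return the (first, last) numbers of the segment this passenger stands in."""
    first = (bp.position - 1) // segment_size * segment_size + 1
    return first, first + segment_size - 1


def boarding_order(passes: list[BoardingPass]) -> list[BoardingPass]:
    """Sort passengers by group letter, then by number within the group."""
    return sorted(passes, key=lambda bp: (bp.group, bp.position))
```

So someone holding a hypothetical B23 would go stand in the 21-25 segment and board after every A and every B with a lower number.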

But it’s all for naught if the lynchpin network device fails. The humble barcode scanner:

Again, as with the boarding processes I discussed above, shit works. I don’t want to undersell what was and is a Herculean effort on the part of the Southwest engineers who have to maintain the airline’s legacy systems, likely written in some godawful language like FORTRAN in the ’80s.

But that’s table stakes, not medal-worthy. Let’s talk about the experience a bit: what message are you communicating when, at a checkout, you scan not your products, but your customers? Hopefully in the near future Amazon will bring their checkout-less technology to airports and we can just waltz onto planes like humans instead of cattle.

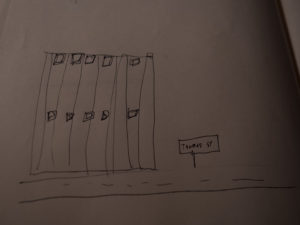

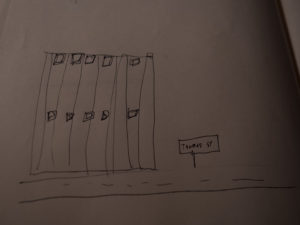

2.) 33 Thomas Street

This is a little meta, but consider the strange, windowless building in lower Manhattan:

It seems (if you look at actual pics and not my shitty drawing) to be the opposite of a “networked” building – no windows, and the Brutalist architecture makes it seem less like a building where things happen and more like a 29-story rock that just landed there.

But more than any other place in the city, I think it deserves the title of “most networky” place in Manhattan.

The building handles routing for AT&T’s long-distance phone network, and manages a lot of other communications data. A power failure in the building in 1991 interrupted nearly 10 million phone calls and pretty much all air traffic control at 400 of America’s airports. The NSA allegedly (thanks Snowden) monitors the communications of the UN, the World Bank, and forty or fifty countries from this building. It’s amazing to me, considering the building’s architecture, how self-effacing it is about its purpose: what is physically the most closed-off building in the city is in fact the most networked.

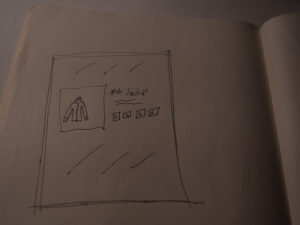

3.) Rebecca Minkoff Dressing-Room “Smart Mirrors”

Think about the experience of trying clothes on in a dressing room at a store (us gals may find this more of a problem than men, judging by the studies on gender and fashion retail, but w/e). You’re trying on what looks like a perfect pair of pants…but they’re too big. In a normal shopping experience, you’d have to take those off, put your clothes back on, and scramble around the store looking for a sales associate to help you find a different size.

Not here. Rebecca Minkoff’s stores are outfitted with smart touchscreen mirrors in their dressing rooms.

Need another size? Order it via the screen, and it’ll appear outside your fitting-room door in a few minutes. Want accessory recommendations for the outfits you have with you? The system can do that too. When it’s time to check out, you can do that through the same interface, thanks to RFID chips in the clothes.
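To make the RFID bit a little more concrete, here’s a minimal Python sketch of how tag reads in a fitting room could become a checkout cart. This is purely my guess at the general shape, not how Rebecca Minkoff’s system is actually built: the tag IDs, catalog, prices, and function names are all invented.

```python
from decimal import Decimal

# Hypothetical catalog keyed by RFID tag ID; IDs, items, and prices are invented.
CATALOG = {
    "tag-0001": ("Pants, size 4", Decimal("148.00")),
    "tag-0002": ("Pants, size 2", Decimal("148.00")),
    "tag-0003": ("Crossbody bag", Decimal("195.00")),
}


def build_cart(tag_reads):
    """Turn raw tag reads into a cart, skipping repeat reads and unknown tags."""
    cart = []
    seen = set()
    for tag in tag_reads:
        if tag in seen or tag not in CATALOG:
            continue
        seen.add(tag)
        cart.append(CATALOG[tag])
    return cart


def cart_total(cart):
    """Sum the prices of everything in the cart."""
    return sum((price for _name, price in cart), Decimal("0"))
```

The de-duplication matters because a reader will typically pick up the same tag many times while the item sits in range; the cart should only count each physical item once.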

The numbers show this is working economically: customers who use the experience purchase 30-40% more than the average customer. And it’s hard to overstate another point about this: they beat Amazon to self-checkout, and they did it in the fashion industry, which is about as legacy and non-innovative on the whole as airlines are (when it comes to customer-centric approaches, at least).