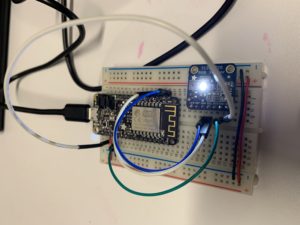

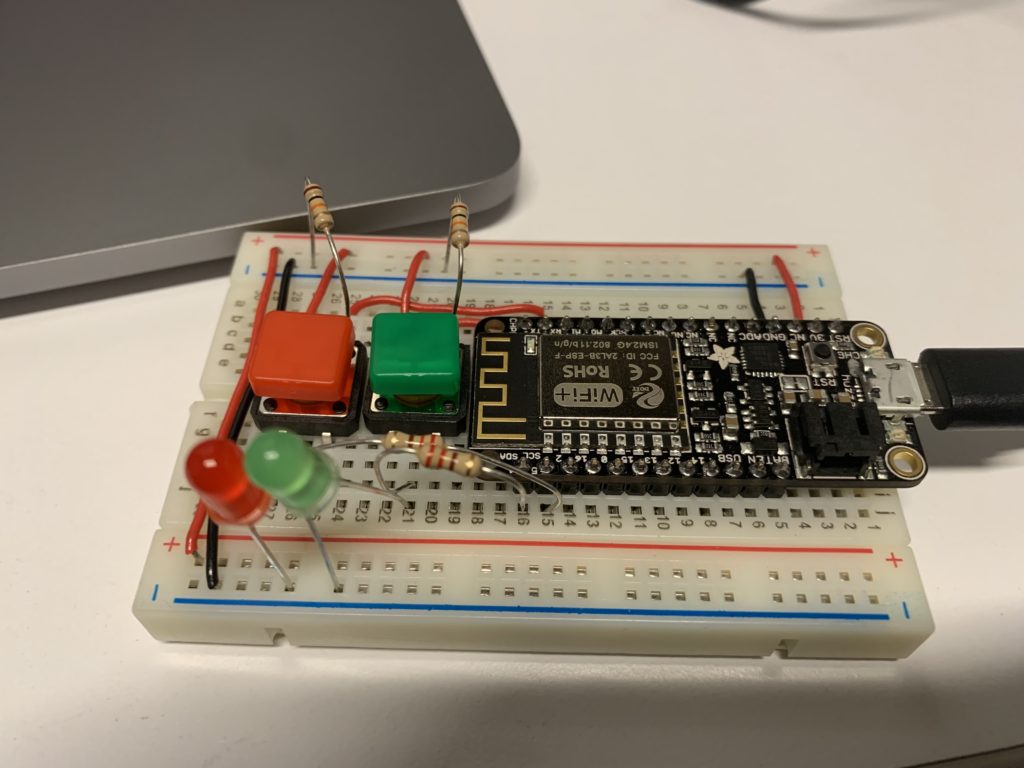

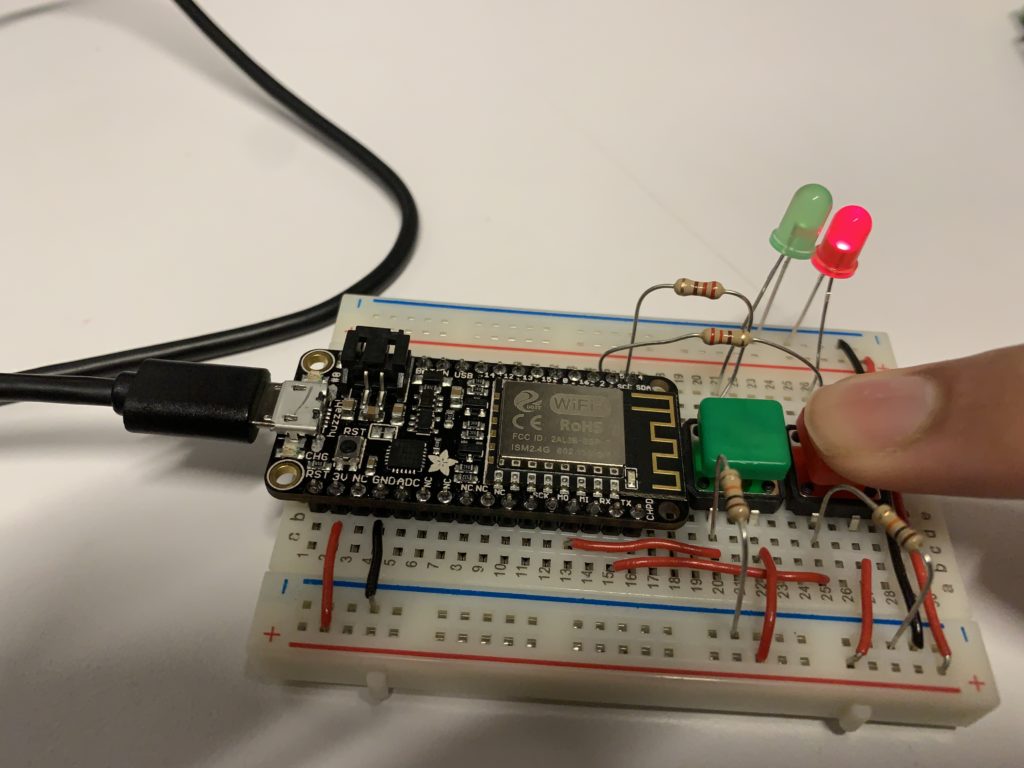

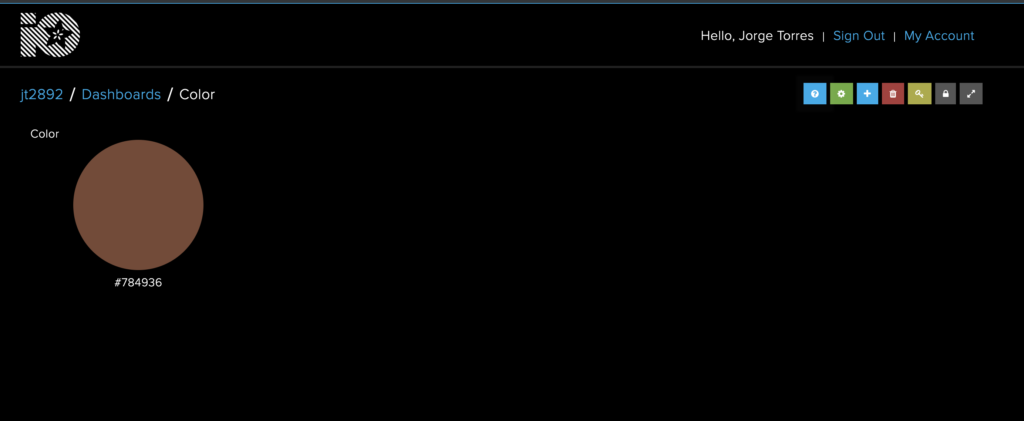

For my final project, I built a color sensor that sends color readings to an Adafruit IO dashboard. The microcontroller is connected to a TCS34725 RGB color sensor, which measures the RGB value of whatever is placed in front of it. That value is relayed to the microcontroller and from there to the dashboard.
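The TCS34725 actually measures four channels: red, green, blue, and clear (unfiltered) light. A 0-255 RGB value has to be derived from those raw counts. The sketch below shows one plausible way to do that: divide each color channel by the clear channel so the result depends less on overall brightness. As far as I can tell the library's `getRGB()` works along similar lines, but treat that as an assumption and check the library source. This is host-side standard C++ rather than Arduino code, and `Rgb` and `normalizeByClear` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical container for normalized channel values in the 0-255 range.
struct Rgb {
    float r, g, b;
};

// Sketch: scale raw red/green/blue counts by the clear (unfiltered) channel.
// Returns black when the clear channel reads zero, to avoid dividing by zero.
Rgb normalizeByClear(std::uint16_t red, std::uint16_t green,
                     std::uint16_t blue, std::uint16_t clear) {
    if (clear == 0) {
        return {0.0f, 0.0f, 0.0f};
    }
    const float sum = static_cast<float>(clear);
    auto scale = [sum](std::uint16_t channel) {
        // Clamp in case a single channel exceeds the clear reading.
        return std::min(255.0f, channel / sum * 255.0f);
    };
    return {scale(red), scale(green), scale(blue)};
}
```

For example, raw counts of red 100, green 50, blue 25 with clear 200 give roughly (127.5, 63.75, 31.9), which the sketch then truncates to whole numbers before formatting.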

Initially, I wanted to build something that would use a phone’s camera to detect the color of an object. But after Professor Scott sent me the link to the color sensor, I saw how many resources Adafruit provides for it. Even with these resources, working with the sensor was cumbersome, and there was a lot of “figuring out” at the start. One of the major problems I had was sending the data to Adafruit IO as the correct data type so the dashboard could use it.

Next steps for this project would be to build out the physical interface. I used a small box to hold the circuit, but I would like to build a proper enclosure and add a battery so the circuit could be taken on the go. Another iteration would also include an easier way to store the color information. At this time, the color is shown on the dashboard but is replaced with a new reading every 3 seconds.
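The data-type problem mentioned above is easy to hit when building the hex string. The sketch sends the color as a `#RRGGBB` string, which only works if every channel is exactly two hex digits. Arduino's `String(value, HEX)` does not pad, so red 10, green 200, blue 5 becomes `#ac85`, only four digits. Below is a minimal sketch of padded formatting in host-side standard C++; `toHexColor` and `toByte` are hypothetical names, and out-of-range values are clamped, then truncated the same way the original `(int)` cast does.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdio>
#include <string>

// Clamp a float channel into 0-255 and truncate it to a whole number.
static unsigned toByte(float value) {
    float clamped = std::min(255.0f, std::max(0.0f, value));
    return static_cast<unsigned>(clamped);
}

// Build a "#RRGGBB" string. "%02X" pads each channel to two digits,
// so a channel of 10 becomes "0A" rather than "A".
std::string toHexColor(float red, float green, float blue) {
    char buffer[8];  // '#' + 6 hex digits + terminating '\0'
    std::snprintf(buffer, sizeof buffer, "#%02X%02X%02X",
                  toByte(red), toByte(green), toByte(blue));
    return std::string(buffer);
}
```

With this approach the same reading (10, 200, 5) becomes `#0AC805`, always seven characters long.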

[code]

#include "config.h"
#include <Wire.h>
#include "Adafruit_TCS34725.h"

// default PWM pins for ESP8266.
// you should change these to match PWM pins on other platforms.
// (these pins are not used elsewhere in this sketch)
#define RED_PIN 4
#define GREEN_PIN 5
#define BLUE_PIN 2

// set up the 'color' feed
AdafruitIO_Feed *color = io.feed("color");

// color sensor with a 50 ms integration time and 4x gain
Adafruit_TCS34725 tcs = Adafruit_TCS34725(TCS34725_INTEGRATIONTIME_50MS, TCS34725_GAIN_4X);

void setup() {
  // start the serial connection
  Serial.begin(115200);

  // wait for serial monitor to open
  while (!Serial);

  // connect to io.adafruit.com
  Serial.print("Connecting to Adafruit IO");
  io.connect();

  // set up a message handler for the 'color' feed.
  // the handleMessage function (defined below)
  // will be called whenever a message is
  // received from adafruit io.
  //color->onMessage(handleMessage);

  // wait for a connection
  while (io.status() < AIO_CONNECTED) {
    Serial.print(".");
    delay(500);
  }

  // we are connected
  Serial.println();
  Serial.println(io.statusText());

  // make sure the color sensor is wired up before continuing
  if (tcs.begin()) {
    Serial.println("Found sensor");
  } else {
    Serial.println("No TCS34725 found ... check your connections");
    while (1) delay(10);  // halt! (delay() keeps the ESP8266 watchdog fed)
  }

  // color->get() requests the feed's last value; it is only useful
  // when the onMessage handler above is enabled
  //color->get();

  // set analogWrite range for ESP8266
  #ifdef ESP8266
  analogWriteRange(255);
  #endif
}

void loop() {
  // io.run(); is required for all sketches.
  // it should always be present at the top of your loop
  // function. it keeps the client connected to
  // io.adafruit.com, and processes any incoming data.
  io.run();

  // send a new reading every 3 seconds without blocking io.run()
  static unsigned long lastSend = 0;
  if (millis() - lastSend < 3000) {
    return;
  }
  lastSend = millis();

  float red, green, blue;

  tcs.setInterrupt(false);  // turn on LED (requires the sensor's LED pin tied to INT)
  delay(60);                // takes 50ms to read
  tcs.getRGB(&red, &green, &blue);
  tcs.setInterrupt(true);   // turn off LED

  // format as "#RRGGBB"; %02X pads each channel to two hex digits,
  // so a value like 10 becomes "0A" instead of "A"
  char hexColor[8];
  snprintf(hexColor, sizeof(hexColor), "#%02X%02X%02X",
           (unsigned int)constrain((int)red, 0, 255),
           (unsigned int)constrain((int)green, 0, 255),
           (unsigned int)constrain((int)blue, 0, 255));

  Serial.print("sending -> ");
  Serial.println(hexColor);
  color->save(hexColor);
}

// this function is called whenever a 'color' message
// is received from Adafruit IO. it was attached to
// the color feed in the setup() function above.
//void handleMessage(AdafruitIO_Data *data) {
//
//  // print RGB values and hex value
//  Serial.println("Received:");
//  Serial.print(" - R: ");
//  Serial.println(data->toRed());
//  Serial.print(" - G: ");
//  Serial.println(data->toGreen());
//  Serial.print(" - B: ");
//  Serial.println(data->toBlue());
//  Serial.print(" - HEX: ");
//  Serial.println(data->value());
//}

[/code]
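The commented-out `handleMessage` reads values back with `data->toRed()`, `toGreen()`, and `toBlue()`. For checking feed values off the device, for example while working on a way to store past readings, here is a sketch of the same kind of decoding in standard C++17. `parseHexColor` and `Color8` are hypothetical names, and this is not the Adafruit IO library's implementation; unlike a lenient parser, it rejects malformed strings such as the unpadded `#ac85`.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <string>

// Hypothetical channel triple decoded from a feed value.
struct Color8 {
    std::uint8_t r, g, b;
};

// Convert one hex digit to its value, or -1 if it is not a hex digit.
static int hexDigit(char c) {
    if (c >= '0' && c <= '9') return c - '0';
    if (c >= 'a' && c <= 'f') return c - 'a' + 10;
    if (c >= 'A' && c <= 'F') return c - 'A' + 10;
    return -1;
}

// Parse "#RRGGBB"; returns std::nullopt for anything else.
std::optional<Color8> parseHexColor(const std::string& text) {
    if (text.size() != 7 || text[0] != '#') return std::nullopt;
    std::uint8_t channels[3];
    for (int i = 0; i < 3; ++i) {
        int hi = hexDigit(text[1 + 2 * i]);
        int lo = hexDigit(text[2 + 2 * i]);
        if (hi < 0 || lo < 0) return std::nullopt;
        channels[i] = static_cast<std::uint8_t>(hi * 16 + lo);
    }
    return Color8{channels[0], channels[1], channels[2]};
}
```

Parsing `#0AC805` yields red 10, green 200, blue 5, while `#ac85` is rejected because it has only four hex digits.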