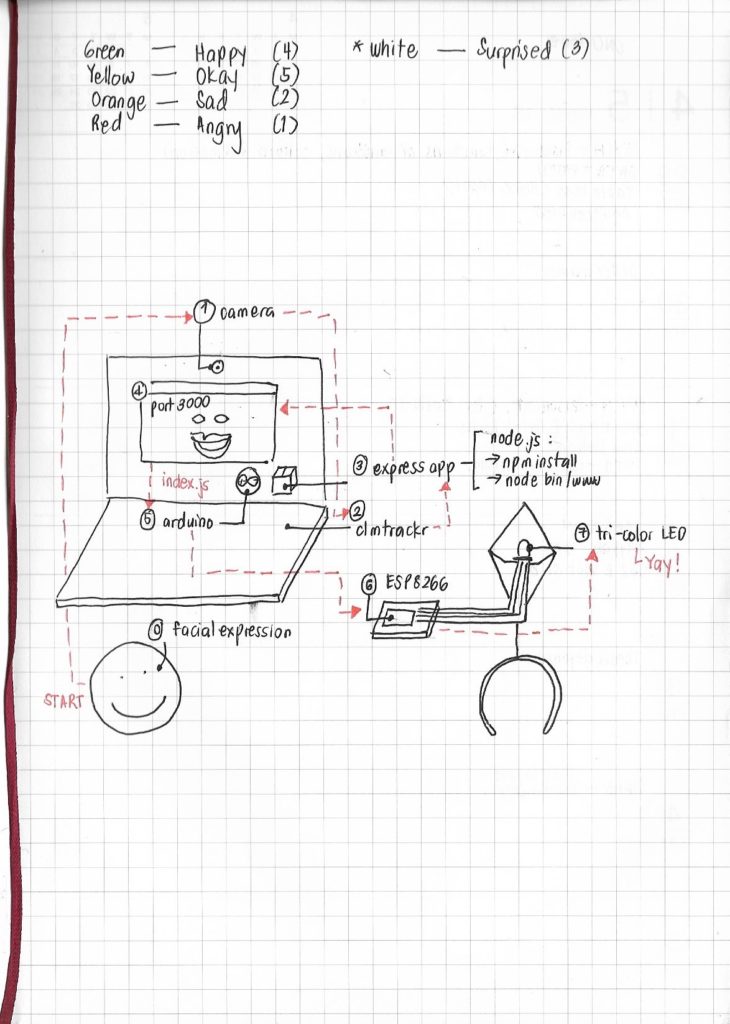

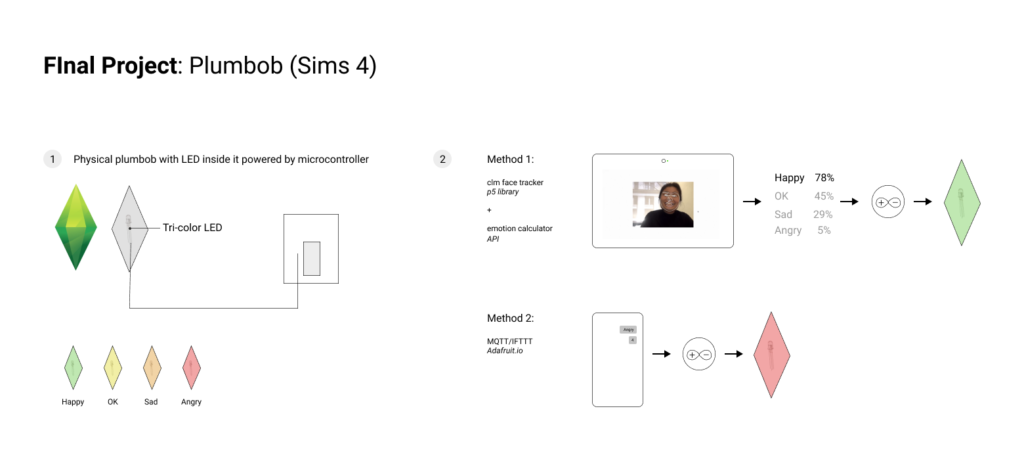

I designed and built a Sims-inspired plumbob that actually responds to changes in a person’s facial expression. I used clmtrackr, a JavaScript library that tracks facial expressions in images and videos using a face-tracking model.

This past week, I worked on:

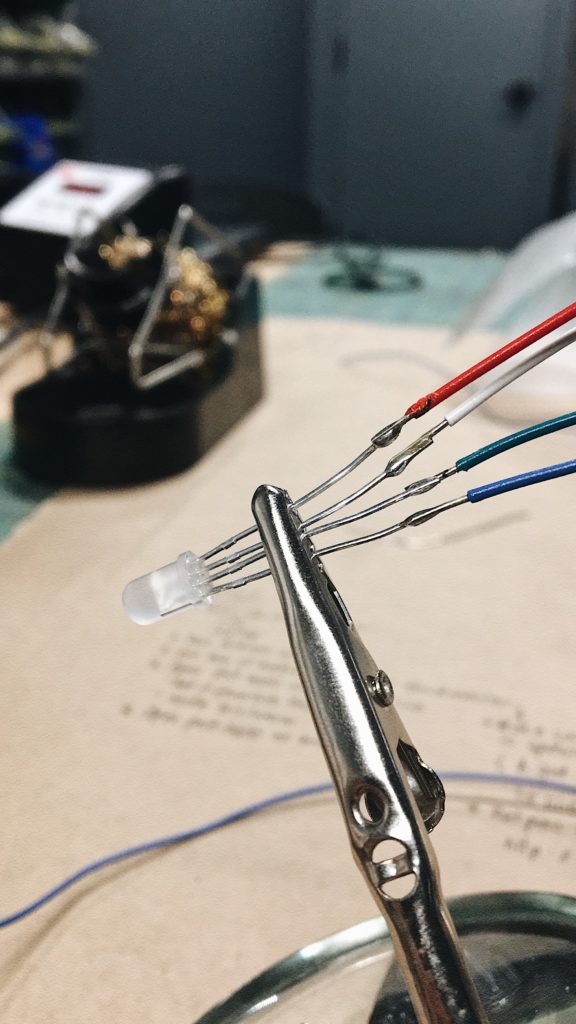

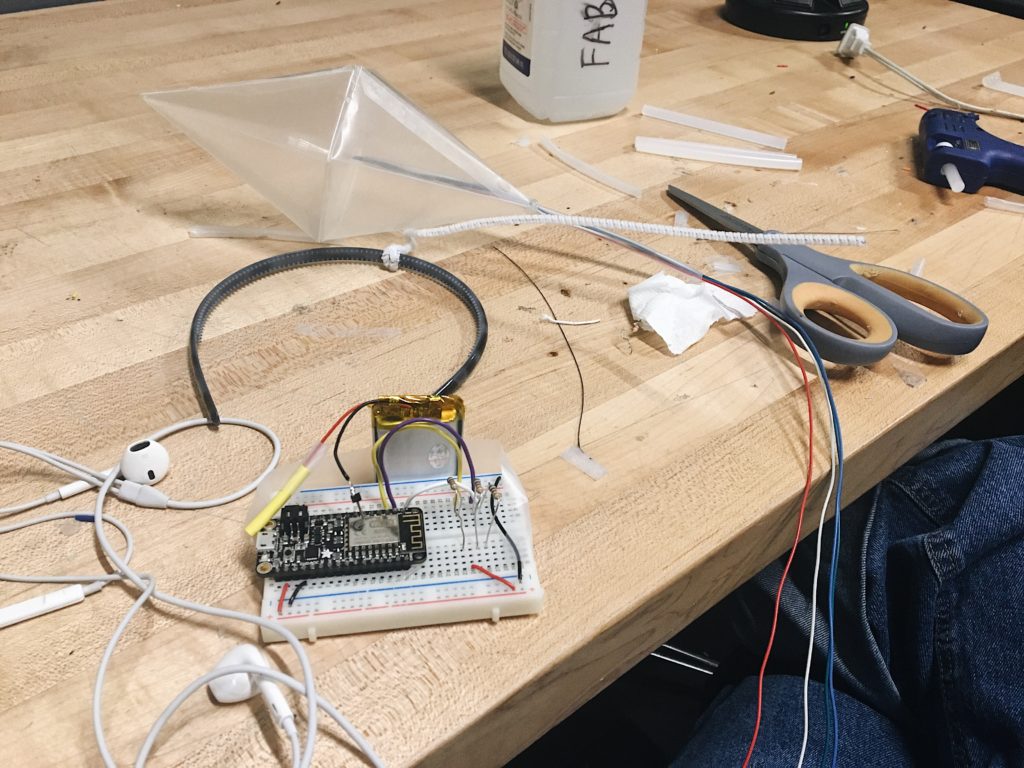

- Hooking up the LiPoly battery to the microcontroller by soldering it to headers.

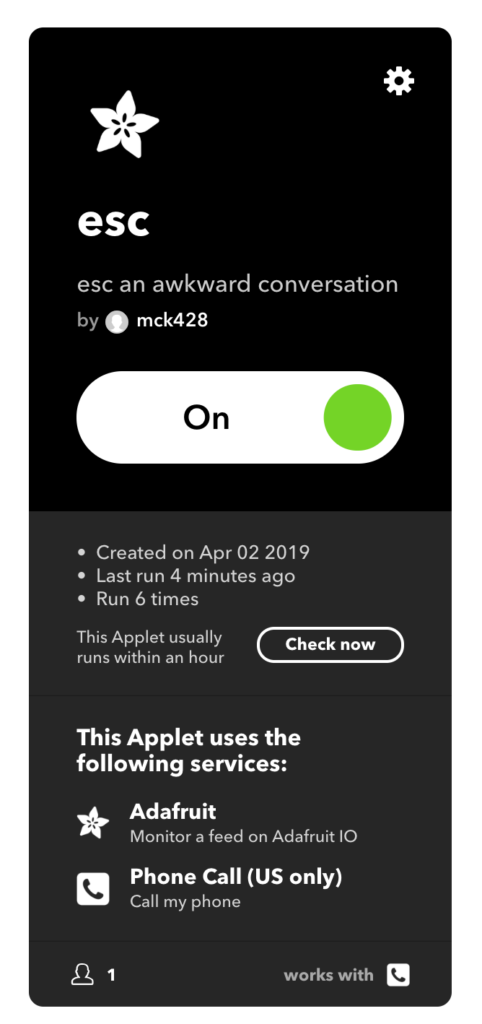

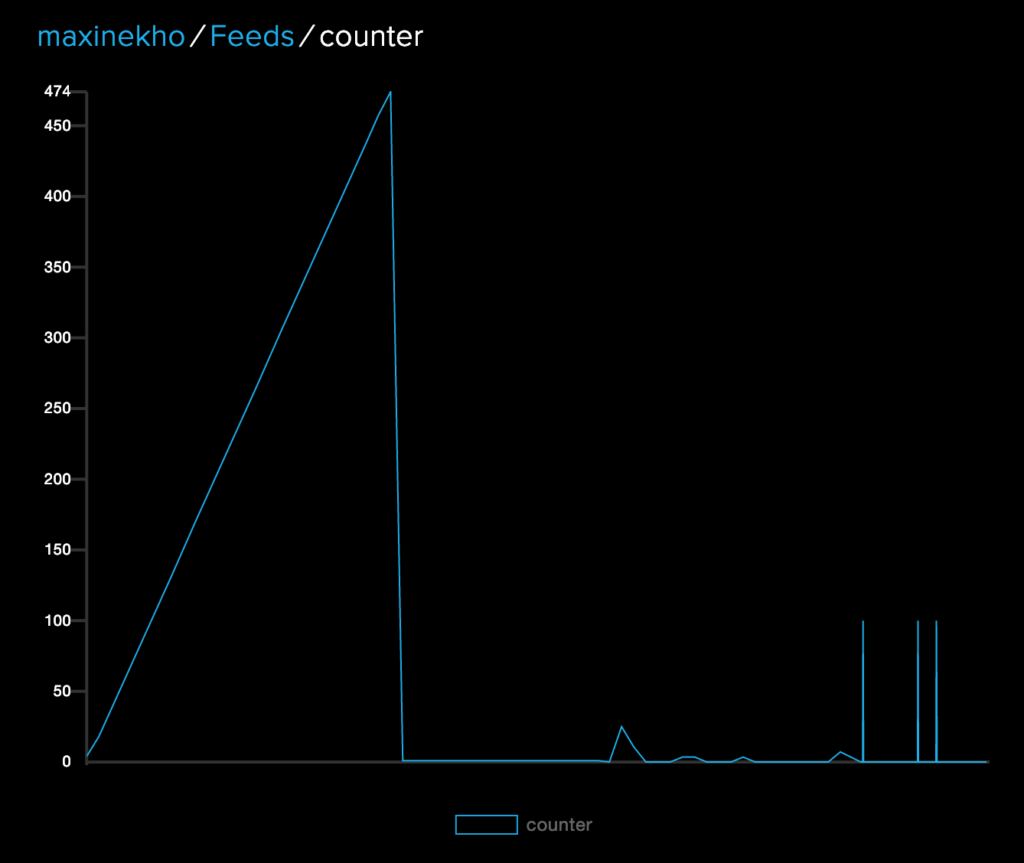

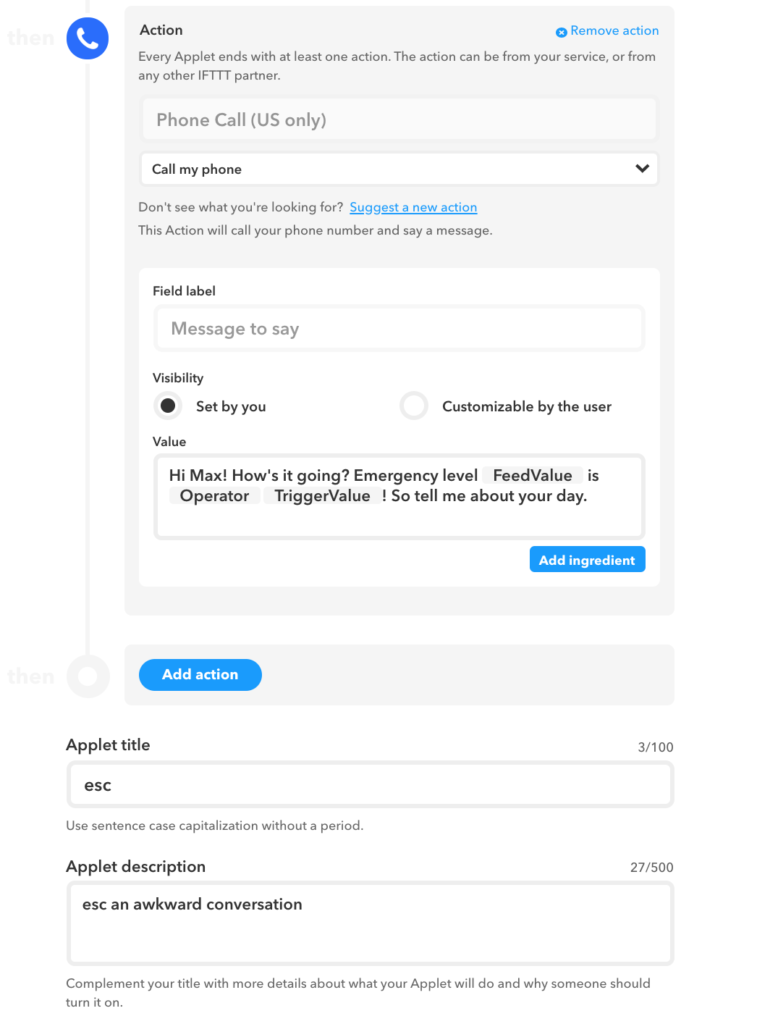

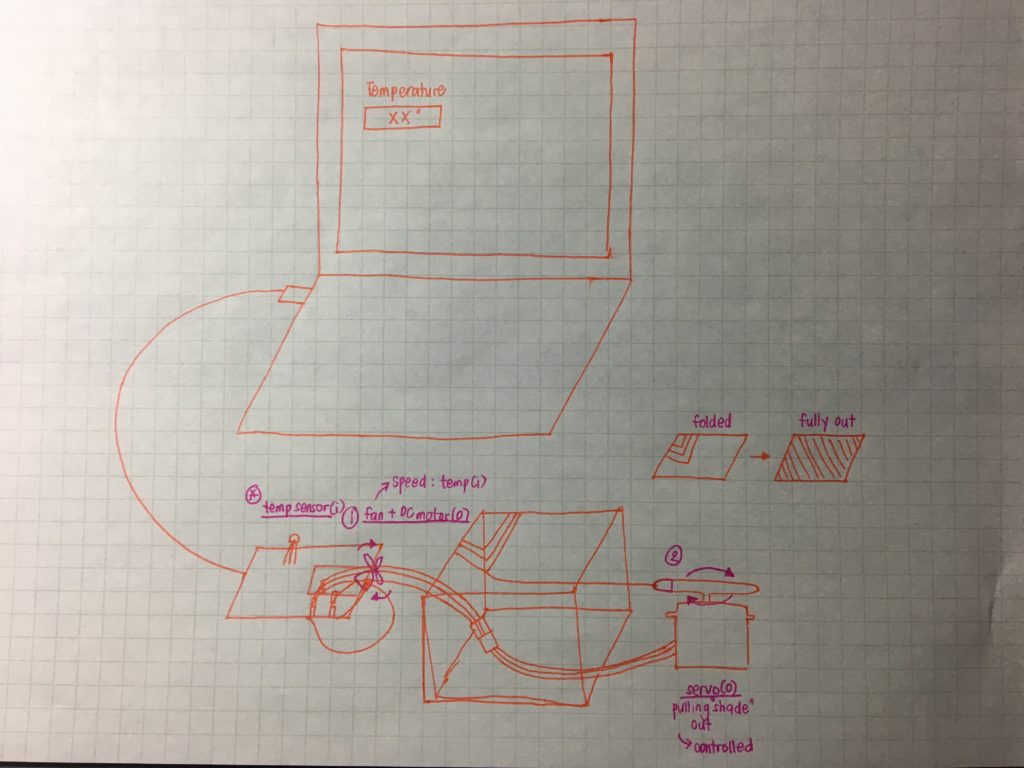

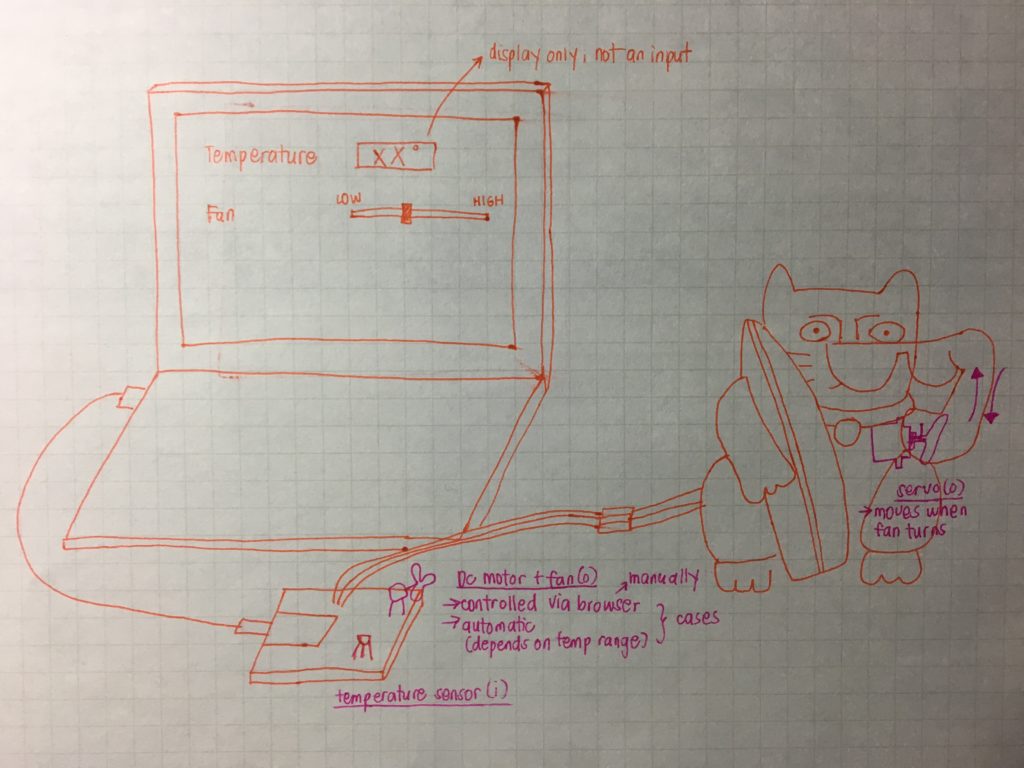

- Communicating with the ESP8266 wirelessly using REST. This was a helpful refresher for me on how aREST works. I also learned what the syntax looks like for calling multiple custom functions that do not require inputs.

- Making an HTTP request in JavaScript. Because I was working in JavaScript, I had to find a way to make a GET request to the corresponding URL every time the model detected a significant change in the person’s facial expression. I ended up using Ajax + jQuery for this after Che Yu explained to me what jQuery does for you!
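The "only send a request when the expression changes" idea can be sketched roughly like this. This is a minimal illustration, not the project's actual code. It assumes the model's predictions arrive as a list of `{ emotion, value }` objects, and that each expression maps to a no-input function on the device reachable at `baseUrl/<functionName>`. The device address, the function names, and the `0.5` threshold are all made up for the example.

```javascript
// Hypothetical helper: pick the highest-scoring emotion from a list like
// [{ emotion: "happy", value: 0.8 }, ...]. Returns null if no score
// reaches the threshold.
function dominantEmotion(predictions, threshold) {
  let best = null;
  for (const p of predictions) {
    if (p.value >= threshold && (best === null || p.value > best.value)) {
      best = p;
    }
  }
  return best ? best.emotion : null;
}

// Build a notifier that sends one GET request per change of expression.
// `sendGet` is passed in so the same logic works with jQuery in the
// browser or with a stub when testing. The URL scheme (baseUrl + "/" +
// emotion name) is an assumption about how the device exposes functions.
function makeExpressionNotifier(baseUrl, sendGet, threshold = 0.5) {
  let last = null; // last expression we told the device about
  return function notify(predictions) {
    const current = dominantEmotion(predictions, threshold);
    if (current === null || current === last) {
      return null; // nothing significant changed, so no request
    }
    last = current;
    const url = baseUrl + "/" + encodeURIComponent(current);
    sendGet(url);
    return url;
  };
}
```

In the browser with jQuery loaded, the sender could be something like `url => $.ajax({ url: url, method: "GET" })`, and `notify` would be called on each tracking frame with the latest predictions. Keeping the last-sent expression in a closure means the device is not flooded with identical requests while the face holds the same expression.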

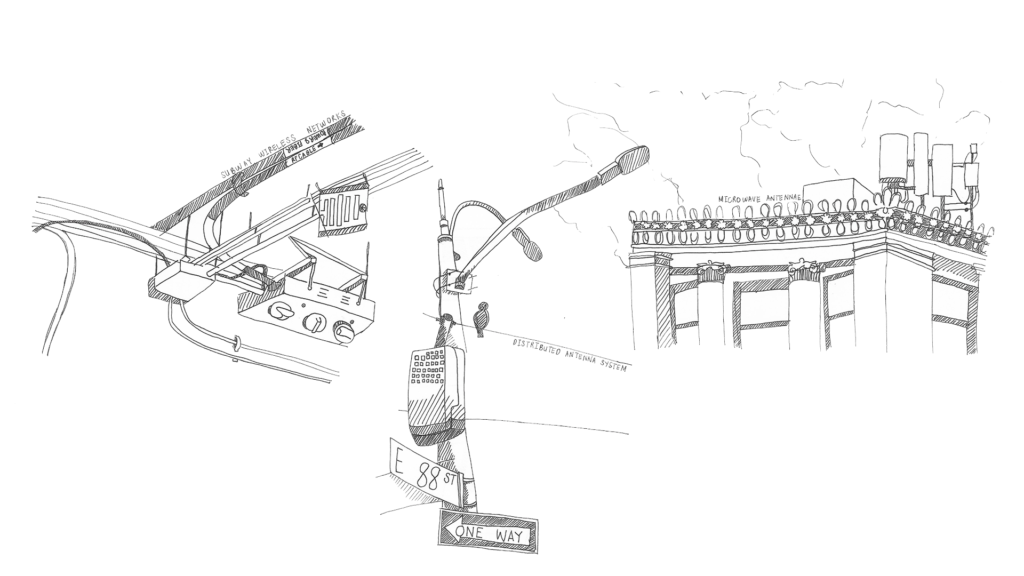

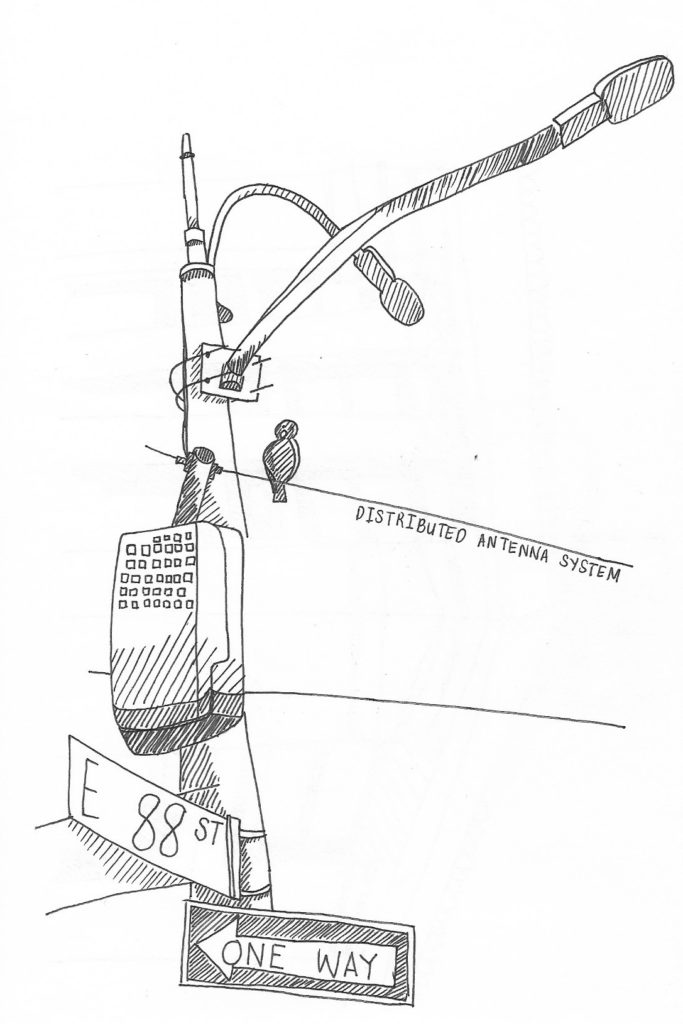

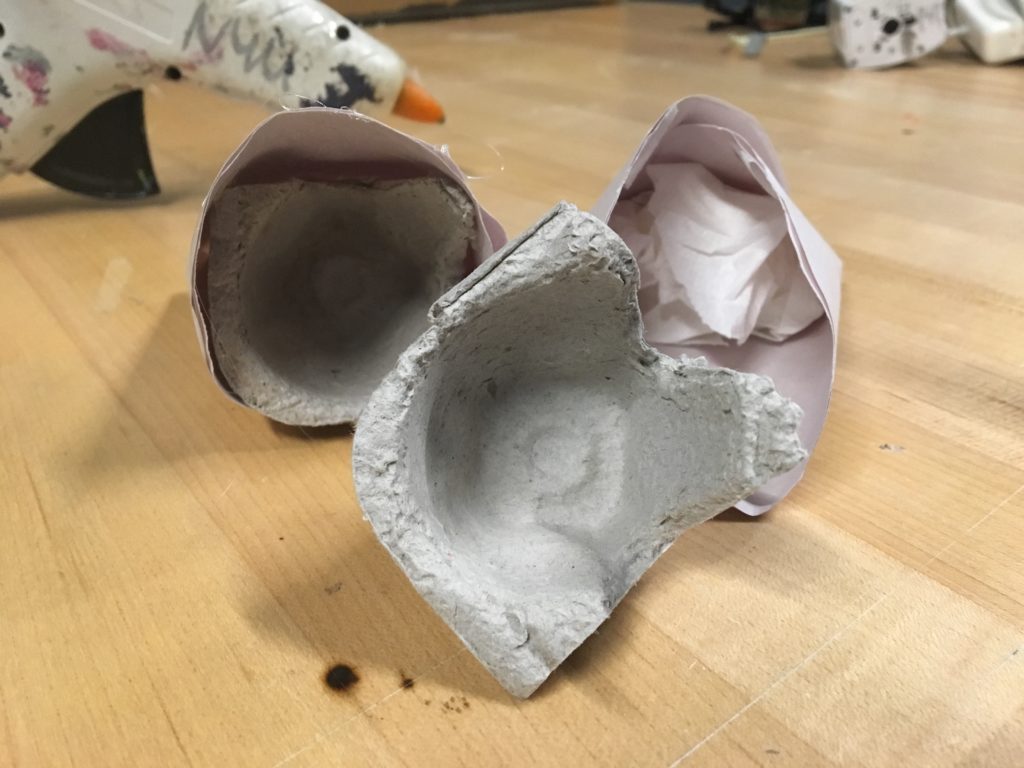

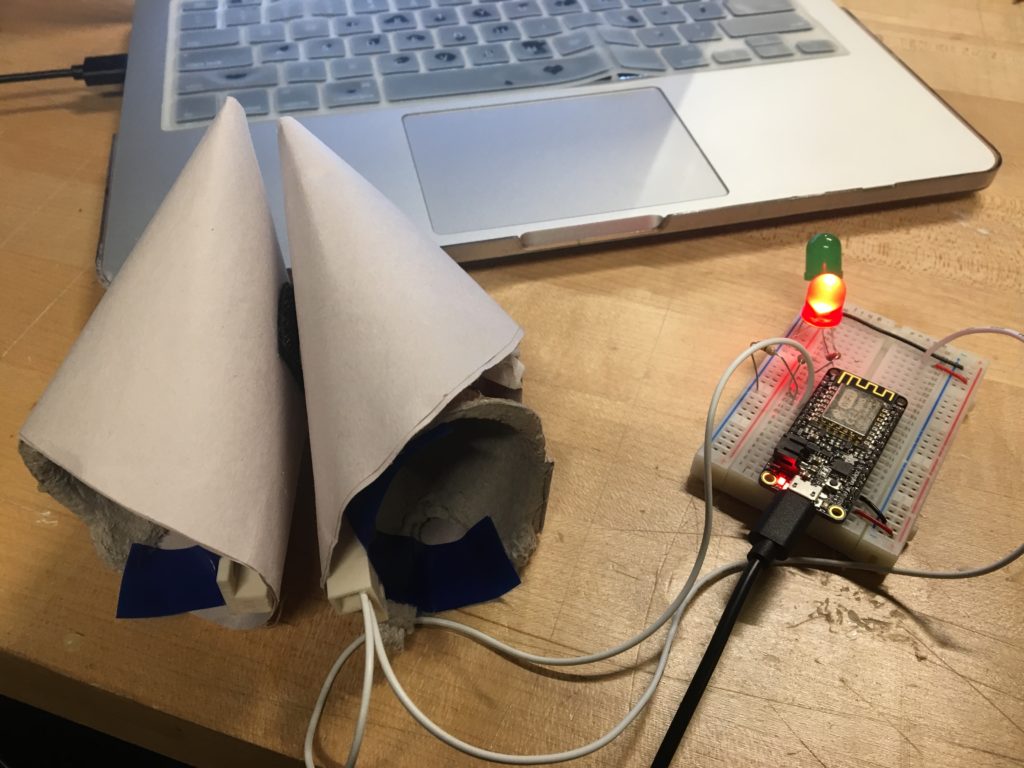

- Designing the physical interface. I spent most of yesterday figuring out, through trial and error, how best to set the device up so that I didn’t need to explain what it was or how to use it, and so that it looked less like a prototype while still showing the “insides”, a feature I find very helpful (at least in my experience learning how things work!).

Code here!

Updated documentation: